Floor Plan Recognition (FPR) is a fundamental discipline within artificial intelligence (AI) that focuses on converting static, unstructured 2D images into fully structured, machine-readable, and analysis-ready datasets. These input images range from PDF scans to .jpeg and .png files, and even CAD files.

The core problem that FPR solves is that architectural drawings are traditionally "static assets." They contain vast amounts of valuable information about geometry, materials, and relationships, but this information is "locked" in a visual format, inaccessible to computational analysis. FPR "unlocks" this data, making it usable for automation, regulatory compliance analysis, design trend analysis, and, most importantly, for automated Quantity Takeoff (QTO) and 3D model generation.

Historically, floor plan analysis was a painstaking manual process, requiring experts (architects, engineers, estimators) to spend hours manually tracing and measuring. This process was not only slow but also highly prone to human error. Early attempts at automation using rule-based approaches failed. Architectural drawings exhibit immense variety in styles, notations, fonts, and scan quality. The lack of a standard notation made it impossible to create a rigid set of rules that could reliably work across different plans. This failure created an acute need for more flexible, trainable systems, leading to the dominance of machine learning (ML) based approaches.

Text Recognition (OCR) – The Semantic Layer

The first component of modern FPR is the ability to read the text on a drawing.

Limitations of Traditional OCR

Traditional Optical Character Recognition (OCR) systems proved inadequate for the complex environment of architectural drawings. These systems are highly sensitive to scan quality. They often confuse similar characters, such as '3' and '8' or 'O' and 'D'. More importantly, they cannot handle the vast diversity of fonts, text orientations (text is often rotated), and the overlap of text with graphical elements, all of which are common in plans.

AI-Powered Intelligent OCR (I-OCR)

To solve these problems, Intelligent OCR (I-OCR), based on machine learning and computer vision, was developed. These systems are specifically designed for engineering drawings. Unlike traditional OCR, I-OCR can:

- Extract Specialized Information: It is trained to recognize not just letters and numbers, but also specialized technical symbols, dimensions, and industry-specific annotations.

- Understand Structure: I-OCR analyzes the drawing's layout to identify and preserve structural elements like title blocks, tables (e.g., material specifications or door schedules), and labels.

- Achieve High Performance: Through fine-tuning on technical datasets, these models can significantly speed up processing (in some cases up to 200x) and achieve high accuracy using engines like Tesseract.

Application of OCR in FPR

The ultimate goal of I-OCR in FPR is not just to extract text, but to make it useful. The extracted text becomes machine-readable, allowing drawings to be indexed, searched, and archived. Most importantly, it enables the semantic linking of text data (e.g., "Bedroom 1," "Concrete C25/30") with the geometric data extracted by CV, which is the foundation for integration with CAD and Building Information Modeling (BIM) systems.

Computer Vision (CV) – The Geometric and Spatial Layer

The second component of FPR is the ability to see and interpret the geometric and spatial elements of the drawing.

CV Tasks in FPR

Computer Vision (CV) uses deep learning models to perform two main tasks in FPR:

- Object Detection: This process identifies and places bounding boxes around discrete elements. It is used to find and count objects like doors, windows, plumbing fixtures, electrical outlets, and other special symbols.

- Semantic Segmentation: This is a more complex task that classifies every pixel of an image. Instead of just finding an object, it defines an area. For example, it "colors" all pixels belonging to "walls" with one color, "rooms" with another, and "corridors" with a third.

The Value of Computer Vision

The application of CV eliminates the need for meticulous manual tracing of lines and boundaries, which is one of the most time-consuming tasks in plan analysis. This allows for the automatic extraction of geometry for analyzing trends in residential layouts or for automated compliance checking against complex regulations.

An analysis of these two pillars shows that Floor Plan Recognition is inherently a hybrid problem. It is not just one CV task. It requires two separate but interconnected AI pipelines. The first pipeline (I-OCR) analyzes the symbolic or textual layer. The second pipeline (CV) analyzes the geometric or spatial layer. A successful FPR system, as confirmed in industry research, must combine "machine learning, computer vision, and OCR." The model that is good at recognizing walls is not the same model that is good at reading dimensions. True value is created when the system can semantically link the outputs of both systems: for example, connecting the text "Bedroom 1" (from I-OCR) to a specific room polygon (from CV).
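The linking step described above can be sketched in a few lines. This is a minimal illustration, not a production method: it assumes the OCR stage yields each label with a single anchor point and the CV stage yields each room as a simple polygon in the same pixel coordinates. The names `point_in_polygon`, `link_labels_to_rooms`, and the sample data are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]


def point_in_polygon(p: Point, polygon: List[Point]) -> bool:
    """Ray casting: toggle 'inside' each time a rightward ray from p crosses an edge."""
    x, y = p
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through p can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def link_labels_to_rooms(
    labels: List[Tuple[str, Point]], rooms: Dict[str, List[Point]]
) -> Dict[str, Optional[str]]:
    """Assign to each room the first OCR label whose anchor point lies inside it."""
    linked: Dict[str, Optional[str]] = {room_id: None for room_id in rooms}
    for text, anchor in labels:
        for room_id, polygon in rooms.items():
            if linked[room_id] is None and point_in_polygon(anchor, polygon):
                linked[room_id] = text
                break
    return linked


# Hypothetical outputs of the two pipelines (coordinates in pixels).
rooms = {
    "room_0": [(0, 0), (4, 0), (4, 3), (0, 3)],
    "room_1": [(4, 0), (9, 0), (9, 3), (4, 3)],
}
labels = [("Bedroom 1", (2.0, 1.5)), ("Kitchen", (6.5, 1.0))]
linked = link_labels_to_rooms(labels, rooms)
```

Real drawings complicate this: labels can sit outside small rooms with a leader line, so a nearest-polygon fallback is usually needed.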

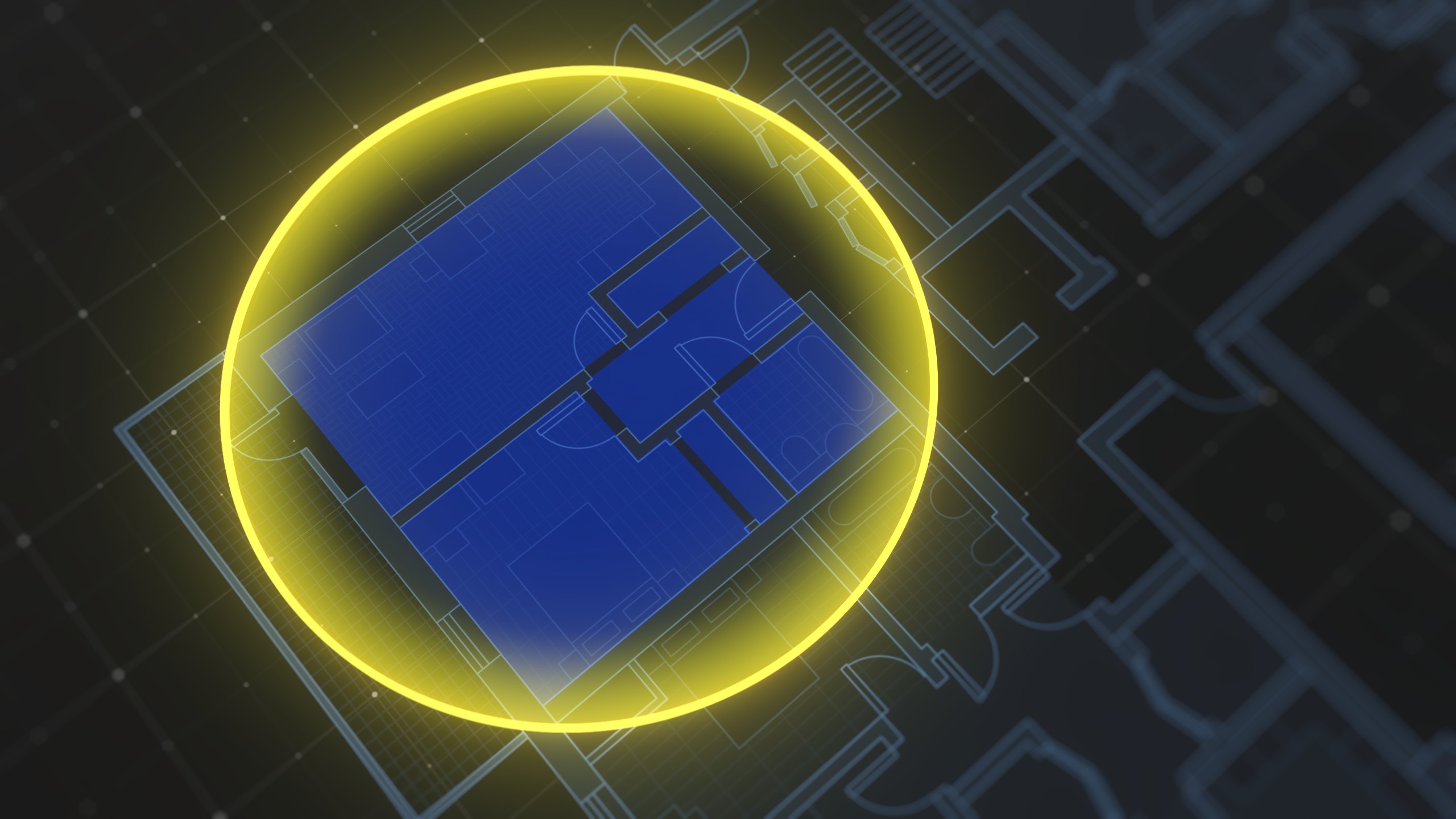

The FPR Technical Pipeline: From Raw Image to Structured Model

The process of converting a raw image into useful data follows a well-defined technical pipeline.

Image Preprocessing and Normalization

The performance of any machine learning model is directly dependent on the quality of the input data. Floor plans are often "dirty" data: scans can be low-quality, have artifacts ("noise"), be rotated, or contain unnecessary markups.

Key preprocessing techniques include:

- Image Quality Enhancement: Algorithms are used to improve the contrast and clarity of plans.

- Cleaning and Alignment: This includes noise removal, deskewing (straightening) rotated images, and cropping unnecessary areas.

- Patching: High-resolution or multi-scale plans are a major obstacle for many CV models. Dividing a single large plan into a grid of normalized "patches" allows the model to process plans of any size.

- Data Augmentation: To improve model reliability, synthetic datasets are often created (e.g., by artificially adding noise or generating new evacuation plans).

Interestingly, research has shown that "manual user preprocessing" (e.g., simple cropping or alignment) yields the "most notable improvement" in model performance. This highlights the importance of providing users with simple tools to "clean up" their drawings before running the AI pipeline.
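As an illustration of the patching step listed above, the sketch below computes the crop boxes for a grid of overlapping patches. The patch size, overlap, and function names are assumptions chosen for the example; actual systems pick them to match the model's input size.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom); right/bottom exclusive


def _starts(length: int, patch: int, stride: int) -> List[int]:
    """Start offsets along one axis so that the patches cover [0, length)."""
    if length <= patch:
        return [0]
    starts = list(range(0, length - patch, stride))
    starts.append(length - patch)  # last patch is shifted back to stay in bounds
    return starts


def patch_grid(width: int, height: int, patch: int = 512, overlap: int = 64) -> List[Box]:
    """Return crop boxes, row by row, that tile an image of the given size."""
    if not 0 <= overlap < patch:
        raise ValueError("overlap must satisfy 0 <= overlap < patch")
    stride = patch - overlap
    return [
        (x, y, min(x + patch, width), min(y + patch, height))
        for y in _starts(height, patch, stride)
        for x in _starts(width, patch, stride)
    ]


boxes = patch_grid(1024, 1024, patch=512, overlap=0)
```

The overlap gives elements that straddle a patch border (a door on the seam, for instance) a chance to appear whole in at least one patch.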

Geometry Extraction and Vectorization

The next step is to convert raster data (pixels) into vector data (lines, points, polygons). This process, called vectorization, is fundamental because it translates the visual representation into a mathematical one that can be used in CAD systems or for 3D model generation.

Techniques include:

- Wall Centerline Extraction: Identifying the centers of walls to create clean linear geometry.

- Contour Detection and Polygon Approximation: Edge and contour detection algorithms trace outlines (e.g., walls), and polygon approximation (for example, the Douglas-Peucker algorithm) then converts these contours into distinct geometric edges.

- Reconstruction from 3D Data: In more complex systems, geometry may be extracted not from 2D scans, but from laser scanning data (LiDAR / Point Cloud) or by stitching together panoramic images.
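To make polygon approximation concrete, here is a small pure-Python sketch of the Douglas-Peucker algorithm, one common choice for this step. The source does not say which approximation method any particular system uses, so treat this as an example of the technique. The `epsilon` tolerance and the sample trace are illustrative.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def _point_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Shortest distance from point p to the segment a-b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    if dx == 0 and dy == 0:
        return math.hypot(px - ax, py - ay)
    # Project p onto the segment and clamp the projection to its endpoints.
    t = ((px - ax) * dx + (py - ay) * dy) / (dx * dx + dy * dy)
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def simplify(points: List[Point], epsilon: float) -> List[Point]:
    """Douglas-Peucker: drop points that deviate less than epsilon from the chord."""
    if len(points) < 3:
        return list(points)
    first, last = points[0], points[-1]
    index, max_dist = 0, 0.0
    for i in range(1, len(points) - 1):
        d = _point_segment_distance(points[i], first, last)
        if d > max_dist:
            index, max_dist = i, d
    if max_dist <= epsilon:
        return [first, last]
    # Keep the farthest point and recurse on both halves.
    left = simplify(points[: index + 1], epsilon)
    right = simplify(points[index:], epsilon)
    return left[:-1] + right


# A noisy pixel trace of two wall segments meeting at a corner.
traced = [(0, 0), (1, 0.05), (2, -0.04), (3, 0.02), (4, 0), (4.03, 1), (3.98, 2), (4, 3)]
corner = simplify(traced, epsilon=0.1)
```

Here the eight traced points collapse to the three that define the corner. A larger `epsilon` removes more scan jitter but can also erase small features such as wall recesses.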

Object Recognition and Room Segmentation

After extracting the basic geometry, deep learning models are applied to identify and classify specific structural elements.

- Wall Identification: Models are trained to distinguish between exterior and interior walls, which are often (but not always) depicted with thicker lines than other elements.

- Opening Identification: Systems detect doors and windows, which are critical elements for determining connectivity.

- Room Segmentation: By logically "closing" openings (e.g., replacing doors and windows with solid lines), the system can identify enclosed areas that represent individual rooms. Often, multi-task neural networks are used for this, which are simultaneously trained to recognize boundaries (walls, doors) and predict room areas.
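The "closing openings" idea can be shown on a toy grid. In this sketch, which assumes the plan has already been rasterized into wall, door, window, and free cells, doors and windows are treated as solid and each remaining connected region is flood-filled with its own label. The character encoding and the function name are hypothetical.

```python
from collections import deque
from typing import List


def label_rooms(grid: List[str]) -> List[List[int]]:
    """Label enclosed regions. '#' wall, 'D' door, 'W' window, '.' free space.

    Returns a grid of ints: 0 for walls and openings, 1..n for rooms.
    Assumes the plan is bordered by walls, so the outside is not labeled as a room.
    """
    rows, cols = len(grid), len(grid[0])
    blocked = {"#", "D", "W"}  # openings are "closed" by treating them as walls
    labels = [[0] * cols for _ in range(rows)]
    next_label = 0
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] in blocked or labels[r][c]:
                continue
            next_label += 1
            labels[r][c] = next_label
            queue = deque([(r, c)])
            while queue:  # breadth-first flood fill over 4-connected neighbors
                cr, cc = queue.popleft()
                for nr, nc in ((cr + 1, cc), (cr - 1, cc), (cr, cc + 1), (cr, cc - 1)):
                    if (
                        0 <= nr < rows
                        and 0 <= nc < cols
                        and grid[nr][nc] not in blocked
                        and labels[nr][nc] == 0
                    ):
                        labels[nr][nc] = next_label
                        queue.append((nr, nc))
    return labels


plan = [
    "#########",
    "#...#...#",
    "#...D...#",
    "#...#...#",
    "#########",
]
room_labels = label_rooms(plan)
```

If the door cell were treated as free space instead, the two rooms would merge into a single region, which is why the openings must be detected first.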

Topological and Semantic Analysis

This final step is the most important and goes beyond simple recognition to understanding. It answers not "what is this?" but "how is this connected?".

- Topological Analysis: The floor plan is transformed into a graph. In this data structure:

  - Nodes represent rooms or spaces.

  - Edges represent paths or adjacency between these spaces, usually defined by the presence of a door or opening.

- Semantic Enrichment: After creating the graph, the system can assign functional data (e.g., "occupancy type") to each room-node. This is done by analyzing topological metrics (e.g., centrality, morphology) and linking them to the text extracted by OCR (e.g., the label "Kitchen").

The final stage of topological analysis is what distinguishes a simple "drawing digitizer" from a true "building analysis tool." A vectorized plan (Steps 2-3) is just a digital drawing, useful for quantity takeoff. However, a topological graph (Step 4) is a digital twin of the building's "brain" or "nervous system." It captures relationships and flows. Research explicitly describes the creation of a "3D topological model mapping spaces and their passage relationships through doors." The existence of this graph is a prerequisite for any high-level analysis, such as understanding functional flows, generating turn-by-turn navigation instructions, or using AI to generate new, functionally sound layouts.
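A room graph of this kind is straightforward to represent and query. The sketch below builds an adjacency structure from a list of door connections and uses breadth-first search to find a route with the fewest door crossings. The room names and helper functions are made up for illustration.

```python
from collections import defaultdict, deque
from typing import Dict, List, Optional, Set, Tuple


def build_graph(doors: List[Tuple[str, str]]) -> Dict[str, Set[str]]:
    """Each door connects two rooms; rooms become nodes, doors become edges."""
    graph: Dict[str, Set[str]] = defaultdict(set)
    for a, b in doors:
        graph[a].add(b)
        graph[b].add(a)
    return graph


def shortest_route(graph: Dict[str, Set[str]], start: str, goal: str) -> Optional[List[str]]:
    """Breadth-first search: the route that passes through the fewest doors."""
    if start == goal:
        return [start]
    parents: Dict[str, Optional[str]] = {start: None}
    queue = deque([start])
    while queue:
        room = queue.popleft()
        for neighbour in sorted(graph.get(room, ())):  # sorted for determinism
            if neighbour in parents:
                continue
            parents[neighbour] = room
            if neighbour == goal:
                path = [goal]
                while parents[path[-1]] is not None:
                    path.append(parents[path[-1]])
                return path[::-1]
            queue.append(neighbour)
    return None  # goal is not reachable from start


doors = [
    ("Hall", "Kitchen"),
    ("Hall", "Living Room"),
    ("Living Room", "Bedroom 1"),
    ("Kitchen", "Dining Room"),
    ("Dining Room", "Living Room"),
]
graph = build_graph(doors)
route = shortest_route(graph, "Kitchen", "Bedroom 1")
```

Turning such a route into turn-by-turn instructions would also need door positions and room geometry, which the vectorized plan supplies.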

Modern AI Architectures in FPR: The Technologist's Toolkit

To implement the complex FPR pipeline, a set of specialized AI architectures is used, each optimized for a specific task.

The Workhorses: U-Net and Mask R-CNN

Two architectures based on Convolutional Neural Networks (CNN) have become industry standards for the core CV tasks in FPR.

- U-Net (for Semantic Segmentation): The U-Net architecture is an elegant "encoder-decoder" model. The encoder compresses the image to extract features, and the decoder reconstructs it to full resolution. Its distinctive feature is its "skip-connections," which pass high-resolution feature information from the encoder to the decoder, enabling extremely precise boundary segmentation.

  - Application in FPR: U-Net is ideal for pixel-perfect segmentation tasks, such as identifying all areas that are "walls" or "rooms." Variations like U-Net++, MDA-UNet, and FGSSNet have been developed to further improve boundary processing and feature merging.

- Mask R-CNN (for Instance Detection and Segmentation): Mask R-CNN is an extension of the popular Faster R-CNN object detector. It adds a third "branch" to the model, which, in addition to classifying the object and predicting the bounding box, generates a high-quality segmentation mask for each individual object detected.

  - Application in FPR: This architecture is indispensable when the requirement is not just to find all doors, but to count and segment every individual instance of a door, window, toilet, or other element. It is often used in conjunction with a ResNet backbone for feature extraction.

The key difference lies in their output: U-Net answers the question: "Which pixels in this image are 'walls'?". Mask R-CNN answers: "Where are the 12 separate instances of doors, and what are the exact boundaries of each one?". For a comprehensive QTO, which requires both area measurements (U-Net) and element counts (Mask R-CNN), both capabilities are necessary.
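The difference in output shape is easiest to see in how each result feeds a takeoff. The sketch below assumes a semantic mask given as a grid of class ids and instance detections given as a list of labeled, scored records. Both formats, the class ids, and the score threshold are assumptions for illustration, not the output format of any specific model.

```python
from collections import Counter
from typing import Dict, List


def class_area_m2(mask: List[List[int]], class_id: int, metres_per_pixel: float) -> float:
    """Area of one class in a semantic mask: pixel count times the area of one pixel."""
    pixels = sum(row.count(class_id) for row in mask)
    return pixels * metres_per_pixel ** 2


def count_instances(detections: List[Dict], min_score: float = 0.5) -> Counter:
    """Count detected instances per label, ignoring low-confidence detections."""
    return Counter(d["label"] for d in detections if d["score"] >= min_score)


# Semantic segmentation output: 0 = background, 1 = room, 2 = wall.
mask = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [2, 2, 2, 2],
]
# Instance segmentation output (masks omitted for brevity).
detections = [
    {"label": "door", "score": 0.9},
    {"label": "door", "score": 0.8},
    {"label": "door", "score": 0.3},
    {"label": "window", "score": 0.7},
]

room_area = class_area_m2(mask, class_id=1, metres_per_pixel=0.5)
counts = count_instances(detections)
```

A semantic mask cannot say how many doors there are, and a list of instances does not directly give total wall area, which is why the two outputs complement each other.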

New Horizons: Vision Transformers (ViT) and Foundation Models (SAM)

- Vision Transformer (ViT): Unlike CNNs, which have a "local" receptive field (they look at pixels in the immediate vicinity), ViT applies the Transformer architecture, dominant in NLP, to images. It breaks the image into "patches" and uses self-attention mechanisms to analyze the global dependencies between all patches simultaneously.

  - Application in FPR: A building layout is inherently a problem of global arrangement. The relationship between the kitchen and the dining room is crucial, even if they are at different ends of the drawing. ViT excels at modeling this "overall layout" of rooms, which is a difficult task for CNNs. Hybrid models are emerging, such as ViLLA (Vision-Language Large Architecture), which combine ViT and LLMs for comprehensive analysis.

- Segment Anything Model (SAM): Meta's SAM is a "foundation model" trained on more than a billion segmentation masks to perform one task: promptable segmentation. It can generate an accurate mask for any object the user points to (with a click, box, or text), without prior training (zero-shot).

  - Limitations in FPR: Despite its power, SAM is class-agnostic. It can perfectly segment an object, but it doesn't know what that object is (a door, a window, or just a smudge on the scan). Research shows that when applied to floor plans, it tends to "over-segment objects" and lacks the "semantically consistent masks" that domain-specific models provide.

Relationship Models: Graph Neural Networks (GNN)

As discussed, the end result of the CV analysis is often a graph. Graph Neural Networks (GNNs) are a class of machine learning models specifically designed to work with data represented as graphs. They are used after the CV pipeline has extracted the nodes (rooms) and edges (doors).

- Application in FPR: GNNs can analyze this graph to perform high-level tasks:

  - Node classification (e.g., classifying a room node as a "corridor" based on its connections).

  - Analysis of complex spatial relationships between building clusters.

  - Merging sparse geometric representations of individual rooms into a unified global geometry.

  - Use as a basis for generative layout models (e.g., Graph2Plan), which generate new layouts based on an input relationship graph.
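The core operation behind these tasks is message passing: each node updates its feature vector from its neighbors. The sketch below shows one simplified round using plain mean aggregation. It deliberately omits the learned weight matrices and nonlinearities that a real GNN layer applies, and the feature values are invented for the example.

```python
from typing import Dict, List


def message_passing_step(
    features: Dict[str, List[float]], adjacency: Dict[str, List[str]]
) -> Dict[str, List[float]]:
    """One round of mean aggregation over each node and its neighbors.

    A trained GNN layer would additionally multiply by learned weights and
    apply a nonlinearity; this keeps only the neighborhood-averaging idea.
    """
    updated = {}
    for node, own in features.items():
        neighbourhood = [own] + [features[n] for n in adjacency.get(node, [])]
        updated[node] = [
            sum(vec[i] for vec in neighbourhood) / len(neighbourhood)
            for i in range(len(own))
        ]
    return updated


# Hypothetical 2-dimensional room features and door-based adjacency.
features = {"hall": [1.0, 0.0], "kitchen": [0.0, 1.0], "bedroom": [0.0, 0.0]}
adjacency = {"hall": ["kitchen", "bedroom"], "kitchen": ["hall"], "bedroom": ["hall"]}
updated = message_passing_step(features, adjacency)
```

After one round the bedroom's vector already carries information from the hall. Stacking several rounds lets each room's representation reflect rooms several doors away, which is what makes connection-based classification possible.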

Interpretation Models: Large Language Models (LLM)

Large Language Models (LLMs) bring the missing element to FPR: semantic understanding and reasoning.

- Application in FPR:

  - Semantic Interpretation: LLMs can "read" the unstructured text extracted by I-OCR (e.g., legends, annotations, specifications) and semantically link it to the geometric objects detected by CV. They can interpret natural language instructions ("the route must avoid the 'toxic' zone").

  - Hybrid Generative Pipelines: New two-phase systems (e.g., HouseLLM, Layout-LLM) are emerging.

    - Phase 1: The LLM interprets the user's natural language query ("I need a three-bedroom house with an open kitchen") and generates an initial sketch or topological graph.

    - Phase 2: A CV model (often a diffusion model) takes this sketch as a "condition" and refines it, turning it into a precise, professional-looking plan.

  - Natural Language Interface: LLMs are increasingly used as an interface, allowing users to "talk" to their drawings and request analysis in natural language.

Comparative Analysis of AI Architectures for FPR

A study of these architectures reveals two key points. First, the latest research in ViT, GNNs, and LLMs is shifting the focus of FPR from simple recognition ("what is this?") to advanced reasoning ("why is it here and how is it connected?"). Second, a critical contradiction arises: while general "foundation models" like SAM are powerful, they are proving to be less effective for specialized AEC tasks than domain-specific models. Industry benchmarks categorically state that general-purpose AIs are "not production-ready" for AEC.

This leads to a clear strategic conclusion: the most successful and resilient approach is a hybrid one. It must use domain-specific CV models (like U-Net and Mask R-CNN) to extract accurate, structured geometric data, and then feed this data into reasoning engines (like LLMs and GNNs) for semantic interpretation, analysis, and user interaction.

Applied Analysis: The Kreo Technology Stack in Action

Overall Architecture of the Kreo AI Engine

Kreo positions itself as a cloud-based platform designed for construction professionals, such as estimators, contractors, and architects. At the core of its value proposition is a hybrid AI engine. This approach is explicitly confirmed in the company's documentation, which mentions the use of both Machine Learning (ML) Algorithms and Natural Language Processing (NLP).

This dual-engine architecture allows Kreo to automate both sides of the FPR problem:

- Geometric Analysis (ML/CV): Automatically identifying and extracting measurements, identifying materials, and calculating quantities.

- Semantic Analysis (NLP): Understanding, interpreting, and extracting data from unstructured text, such as project specifications, contracts, and annotations on drawings.

Deconstructing Kreo's Features (Technology Mapping)

An analysis of Kreo's specific user-facing features reveals how these underlying AI technologies are applied in practice.

AI Scale

- Function: When a drawing is uploaded, the system prompts the user to set the scale. However, if the plan includes a graphical scale, the "AI Scale" feature can "automatically detect and apply the correct scale."

- Underlying Technology: This is a classic hybrid CV + Intelligent OCR task. A CV model (similar to an object detector) is trained to find the common visual patterns of graphical scale bars on the drawing. Once this area is identified, an I-OCR model (described in the Intelligent OCR section above) is activated to read the text and numbers in that area (e.g., "1:100," "0m... 5m... 10m"). This seemingly simple feature eliminates the most frequent and costly source of manual error in quantity takeoff.
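Once the scale bar and its label are found, the remaining arithmetic is simple. The sketch below is not Kreo's implementation. It shows, under stated assumptions, two ways a metres-per-pixel factor could be derived: from a detected bar of known pixel length with an OCR'd label, or from a ratio such as "1:100" when the scan resolution (DPI) is known. The function names and label formats are hypothetical.

```python
import re

_UNIT_TO_METRES = {"mm": 0.001, "cm": 0.01, "m": 1.0}


def metres_per_pixel_from_bar(bar_length_px: float, bar_label: str) -> float:
    """Scale from a graphical bar: real length (from OCR) divided by pixel length."""
    match = re.fullmatch(r"\s*(\d+(?:\.\d+)?)\s*(mm|cm|m)\s*", bar_label)
    if not match:
        raise ValueError(f"unrecognised scale label: {bar_label!r}")
    value, unit = float(match.group(1)), match.group(2)
    return value * _UNIT_TO_METRES[unit] / bar_length_px


def metres_per_pixel_from_ratio(ratio_label: str, dpi: float) -> float:
    """Scale from a '1:N' ratio, assuming the scan resolution in DPI is known."""
    match = re.fullmatch(r"\s*1\s*:\s*(\d+)\s*", ratio_label)
    if not match:
        raise ValueError(f"unrecognised ratio label: {ratio_label!r}")
    denominator = int(match.group(1))
    metres_per_paper_inch = 0.0254 * denominator  # one inch on paper, in real metres
    return metres_per_paper_inch / dpi


bar_scale = metres_per_pixel_from_bar(400, "10m")      # bar is 400 px long, labeled 10 m
ratio_scale = metres_per_pixel_from_ratio("1:100", 300)  # 1:100 drawing scanned at 300 DPI
```

The graphical bar is the more robust source, because it stays correct even if the drawing was resized before scanning, whereas a printed ratio does not.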

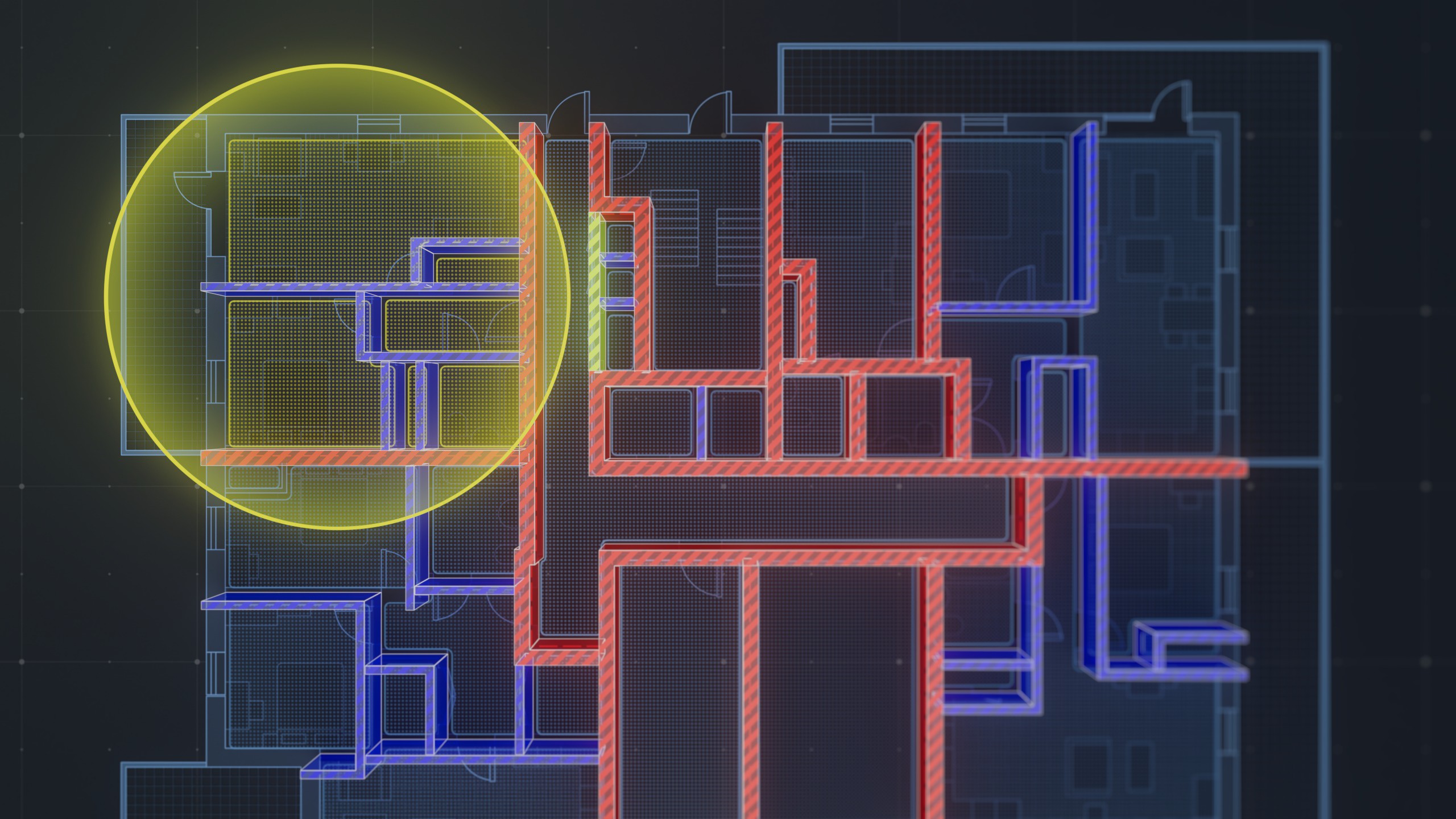

"Auto Measure" and "One-Click Area"

- Function: This is the platform's flagship automation feature. With a single click, the AI scans the entire drawing, "automatically detecting, classifying, and measuring key elements." It identifies room boundaries, calculates areas and perimeters, and distinguishes between walls, doors, and windows. Users confirm that this feature "significantly" speeds up the calculation process.

- Underlying Technology: This is the core of Kreo's CV pipeline. Its implementation almost certainly uses a combination of the two architectures discussed in the section on modern AI architectures above:

  - Semantic Segmentation (U-Net style): This model "colors" large continuous areas, such as room floors. This enables the "One-Click Area" feature, where a user clicks anywhere in a room, and the system instantly generates precise measurements for that entire segmented area.

  - Instance Detection/Segmentation (Mask R-CNN style): This model is used to detect and count individual elements like doors and windows.

  - The sophistication of these algorithms is evident from the description of their ability to "interpret complex corners" and "accurately snap to interior wall contours," which is necessary for obtaining precise measurements.
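Once a room boundary exists as a polygon, its area and perimeter follow from standard geometry. The sketch below uses the shoelace formula on an L-shaped room. The vertex coordinates are assumed to be already scaled to metres, and the example room is invented.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def polygon_area(vertices: List[Point]) -> float:
    """Shoelace formula: half the absolute sum of cross products of consecutive vertices."""
    total = 0.0
    n = len(vertices)
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2


def polygon_perimeter(vertices: List[Point]) -> float:
    """Sum of the edge lengths, including the closing edge back to the first vertex."""
    n = len(vertices)
    return sum(math.dist(vertices[i], vertices[(i + 1) % n]) for i in range(n))


# An L-shaped room, coordinates in metres.
room = [(0, 0), (6, 0), (6, 3), (3, 3), (3, 5), (0, 5)]
area = polygon_area(room)            # 6 x 3 block plus 3 x 2 block
perimeter = polygon_perimeter(room)
```

The formula works for any simple (non-self-intersecting) polygon, which is why clean corner handling in the extracted contour matters: a misplaced vertex changes the measured area directly.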

Find Similar with AI

- Function: Allows users to "find and measure similar areas" across the entire plan or even across multiple plans.

- Underlying Technology: This is likely an application of few-shot learning or one-shot learning. The user provides a single example (e.g., manually tracing a "Type A" apartment on a floor plan) and "trains" the model on the fly. The CV model then scans the rest of the document for other instances with a similar geometric signature or "footprint."
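How a "geometric signature" might be compared can be illustrated with a deliberately crude descriptor. The sketch below is an assumption made for illustration, not a description of Kreo's model. It represents each polygon by its sorted edge lengths normalized by perimeter, which makes the comparison insensitive to position, rotation, and scale.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def edge_signature(polygon: List[Point]) -> List[float]:
    """Sorted edge lengths as fractions of the perimeter."""
    n = len(polygon)
    lengths = [math.dist(polygon[i], polygon[(i + 1) % n]) for i in range(n)]
    perimeter = sum(lengths)
    return sorted(length / perimeter for length in lengths)


def is_similar(a: List[Point], b: List[Point], tolerance: float = 0.02) -> bool:
    """Two polygons match if they have the same edge count and close signatures."""
    sig_a, sig_b = edge_signature(a), edge_signature(b)
    if len(sig_a) != len(sig_b):
        return False
    return all(abs(x - y) <= tolerance for x, y in zip(sig_a, sig_b))


def find_similar(
    example: List[Point], candidates: Dict[str, List[Point]], tolerance: float = 0.02
) -> List[str]:
    """Names of the candidate polygons whose signature matches the user's example."""
    return [name for name, poly in candidates.items() if is_similar(example, poly, tolerance)]


example = [(0, 0), (4, 0), (4, 3), (0, 3)]  # the area the user traced
candidates = {
    "unit_2": [(10, 0), (14, 0), (14, 3), (10, 3)],    # same shape, translated
    "unit_3": [(0, 10), (3, 10), (3, 14), (0, 14)],    # same shape, rotated 90 degrees
    "corridor": [(0, 20), (10, 20), (10, 21), (0, 21)],
}
matches = find_similar(example, candidates)
```

Different shapes can share the same sorted edge lengths, so this descriptor produces false positives on complex outlines. A learned embedding, as the few-shot framing suggests, would be far more discriminative.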

Caddie AI

- Function: "Caddie" is described as a "smart AI assistant" that goes beyond geometry to extract valuable information from the text on drawings. It is specifically designed to understand "hard-to-read" drawings, "annotations, legends," and can extract "tables, areas, room names" even from "scanned PDFs or handwritten plans."

- Underlying Technology: This is a direct application of NLP and, most likely, a domain-specific Large Language Model (LLM). While "Auto Measure" handles the geometry, "Caddie" handles the unstructured text. It takes the raw OCR output and applies semantic understanding to it. This capability allows Kreo to automatically "read and associate names with specific rooms" (e.g., linking the text "Living Room" to the polygon detected by "Auto Measure"). This connection between semantics (text) and geometry (shapes) is crucial for creating an accurate and detailed Bill of Quantities (BoQ).

Mapping Kreo Features to Underlying AI Technologies

An analysis of Kreo's product strategy reveals a highly effective separation of concerns. "Auto Measure" (the CV engine) and "Caddie AI" (the NLP/LLM engine) function as two separate but complementary "brains." This "dual-engine" architecture, confirmed in the company's documentation, is a significant strategic advantage. It allows Kreo to independently update each engine as technology evolves.

For example, the CV team can implement a new, more advanced segmentation architecture (e.g., an upgrade from U-Net to U-Net++) without touching or breaking the NLP engine. Conversely, the NLP team can swap the "Caddie" backend for a more powerful, modern LLM without requiring a rebuild of the entire CV pipeline. This modularity makes the system more flexible, scalable, and resilient to the rapid changes in the AI landscape.

Strategic Significance and Industry Context: Why Kreo is Winning

The Core Application: Revolutionizing Quantity Takeoff (QTO)

The primary application of Kreo's FPR technology is the automation of Quantity Takeoff (QTO). Traditional QTO is a manual, slow, and extremely error-prone process of counting and measuring every element on a drawing. Artificial intelligence, and FPR in particular, is the key technology for solving this problem.

Kreo's pipeline directly addresses this challenge:

- Quantity Extraction: CV features ("Auto Measure," "One-Click Area") automatically extract precise lengths (walls), areas (floors, walls), and counts (doors, windows).

- Semantic Matching: NLP features ("Caddie AI") read legends, specifications, and annotations to match these quantities with specific material and labor descriptions.

- Result: The platform automatically generates detailed Bills of Quantities. This leads to a reported 13.5x speed-up in the process and a significant increase in accuracy. More importantly, it changes the role of the estimator: instead of spending days on rote measurements, they can focus on higher-level tasks like risk analysis, cost optimization, and strategic consulting.
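The extraction and matching steps above can be sketched as a simple aggregation. The record layout, element keys, and item descriptions below are hypothetical and chosen only to show how measured quantities and text-derived descriptions could combine into bill lines.

```python
from collections import defaultdict
from typing import Dict, List


def build_boq(measurements: List[Dict], descriptions: Dict[str, str]) -> List[Dict]:
    """Group measured quantities by matched description and unit, then total them."""
    totals = defaultdict(float)
    for m in measurements:
        # Elements without a matched description are flagged rather than dropped.
        description = descriptions.get(m["element"], "UNMATCHED: " + m["element"])
        totals[(description, m["unit"])] += m["quantity"]
    return [
        {"description": description, "unit": unit, "quantity": round(quantity, 2)}
        for (description, unit), quantity in sorted(totals.items())
    ]


# Quantities from the CV side (hypothetical values).
measurements = [
    {"element": "wall_int", "quantity": 12.5, "unit": "m"},
    {"element": "wall_int", "quantity": 7.5, "unit": "m"},
    {"element": "door", "quantity": 1, "unit": "nr"},
    {"element": "door", "quantity": 1, "unit": "nr"},
    {"element": "floor", "quantity": 24.0, "unit": "m2"},
]
# Descriptions from the text side, e.g. read from a legend (hypothetical values).
descriptions = {
    "wall_int": "Internal partition, 100 mm blockwork",
    "door": "Timber door set",
    "floor": "Floor screed",
}
boq = build_boq(measurements, descriptions)
```

Flagging unmatched elements instead of silently omitting them keeps the estimator aware of items the text analysis could not classify.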

Benchmarking and Performance Reality: Domain-Specific AI vs. General-Purpose AI

The value of Kreo's approach becomes clear when compared to the performance of the latest general-purpose AI models.

The Problem with General-Purpose Models

The newest multimodal LLMs (models that can "see" images and "reason" about them, such as GPT-5 and Gemini) fail miserably at basic AEC tasks.

Key data comes from AECV-bench, a systematic test that evaluated the performance of 7 multimodal LLMs on 150 residential floor plans. The results were a "reality check":

- When attempting a simple door count, GPT-5 produced the correct result only 12% of the time.

- The best-in-class model (Gemini 2.5 Pro) achieved an average accuracy across all elements of just 41%.

- Accuracy for small but critical symbolic elements was extremely low: Doors (26%), Windows (14%).

The conclusion of this research is unequivocal: "General-Purpose AI Isn't Enough." Architectural drawings are a highly specialized visual language. Reliable recognition requires models trained on domain-specific datasets and tailored for domain-specific tasks.

Kreo's Strategic Positioning

Kreo is that domain-specific AI. Its models (like "Auto Measure") are not general-purpose; they have been intentionally trained on thousands of real-world construction plans, specifically for QTO tasks. This explains why Kreo can reliably perform tasks (with a reported 13.5x process speed-up) on which the most advanced general-purpose models struggle, with GPT-5 counting doors correctly only 12% of the time.

AI Performance Benchmarking in AEC

This comparison demonstrates that the investment in domain-specific training is a deep competitive moat that tech giants with their general-purpose models cannot easily cross.

Quality Management and the Role of "Human-in-the-Loop" (HITL)

Automation is not a "magic button." AI is not perfect. Challenges exist related to software complexity, integration problems with existing workflows, and the need for staff training.

Kreo openly acknowledges this in its own materials, warning against "Mistake #4: Relying Solely on Software." The Kreo platform is not designed to replace the estimator, but to augment them. It operates most effectively in a "Human-in-the-Loop" (HITL) workflow.

In this new paradigm, the role of the human expert shifts:

- From: Manual measurement and data entry.

- To: Data validation and auditing of AI-generated results.

The AI performs 90% of the rote work (measuring and counting), and the human expert quickly verifies the remaining 10% (correcting errors, confirming classifications), freeing up their time for strategic analysis.
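One common way to organize such a workflow is to route results by model confidence. The sketch below assumes each AI-generated item carries a confidence score and uses an arbitrary threshold. Both are illustrative assumptions, not a documented Kreo mechanism.

```python
from typing import Dict, List, Tuple


def split_for_review(
    items: List[Dict], threshold: float = 0.9
) -> Tuple[List[Dict], List[Dict]]:
    """Separate items the model is confident about from those needing human review."""
    accepted = [item for item in items if item["confidence"] >= threshold]
    needs_review = [item for item in items if item["confidence"] < threshold]
    return accepted, needs_review


# Hypothetical AI output for one drawing.
items = [
    {"id": "room_1", "label": "Bedroom", "confidence": 0.98},
    {"id": "room_2", "label": "Kitchen", "confidence": 0.95},
    {"id": "door_7", "label": "door", "confidence": 0.62},
]
accepted, needs_review = split_for_review(items)
```

The threshold sets the trade-off: lowering it reduces the reviewer's workload but lets more unverified errors through, so it is typically tuned against audited samples.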

The Future Trajectory: From Recognition Tool to Predictive Analytics Platform

Kreo's long-term value lies not just in the AI engine itself, but in the data it generates. This HITL workflow creates a powerful, self-reinforcing feedback loop:

- Acquisition: Kreo offers a powerful AI tool ("Auto Measure" + "Caddie") that attracts estimators and contractors.

- Execution: Users process thousands of real-world drawings.

- Validation (HITL): Users correct the AI's mistakes as part of their quality assurance workflow.

- Training: These corrections are, in effect, free, expert-level data labeling, which is the most expensive and time-consuming part of AI development.

- Improvement: Kreo uses this labeled data to continuously retrain and improve its models, making the tool even more accurate and valuable.

- Growth: A more accurate tool attracts more users, and the cycle repeats.

This is a classic "data flywheel" that builds a massive and ever-growing competitive advantage. The byproduct of this cycle is an enormous, structured, and expert-validated dataset of real-world construction projects and their costs.

This asset allows Kreo to evolve. Kreo's future is not just as a QTO tool. By leveraging this trove of "historical data," Kreo is perfectly positioned to build predictive cost models. The platform will be able to move from answering "What is the cost of this project?" to answering "What is the probable cost of a project you are about to design, and where are the greatest risk areas?"

The future trajectory is clear: deeper integration with BIM, the development of "agentic workflows" where AI performs multi-step tasks, and leveraging its unique dataset to become the leading platform for predictive cost analytics in the construction industry.
